Seeing Through the Dark

2min 34sec

This experimental video explores assemblages of material in tracing the past, reimagining the invisible as visible. The project began with digitizing a historic building in Shanghai, China, and activating the past with 3D technologies to address questions of the performativity and non-linearity of memory. Viewing the urban landscape as a palimpsest, I was led by the layers that accumulate through urbanization and gentrification to explore what remains invisible in that landscape. I used a 16mm Bolex camera to re-capture the digital preservation, hand-processed the film footage, and used AI to reimagine the scratches and chemical contamination left on the hand-processed 16mm film. This experimental video piece is co-created by the artist, machine algorithms, and chemicals. This co-creation process raises a question: how do we make the invisible visible with machine algorithms?

With generative AI, the imperceptible past is reformulated into different forms, alluding to the micro and macro universe in abstraction. The title, “Seeing Through the Dark,” alludes both to the process of developing film in the darkroom, which generates the “reality” of the past, and to the “black box” of the generative AI algorithm, which simulates the moving images of the future. Layering audio from NASA’s Milky Way sonification data, this work zooms out to the universe, composed mostly of dark energy and dark matter. This video interweaves human and non-human agents to reimagine a disappearing building as abstract planetary imagery, reminding us that we live in a world of assemblages of beings in sympoiesis.

Technical Description:

In this project, I began by using photogrammetry to scan a building near the Bund area in Shanghai, China. All the residents of the building had been forced to move out due to ongoing gentrification policies. After digitizing part of the building’s interior architecture, I used Blender, an open-source 3D modeling application, to clean the photogrammetry model and reconstruct a new synthetic architectural space based on the real scan data.

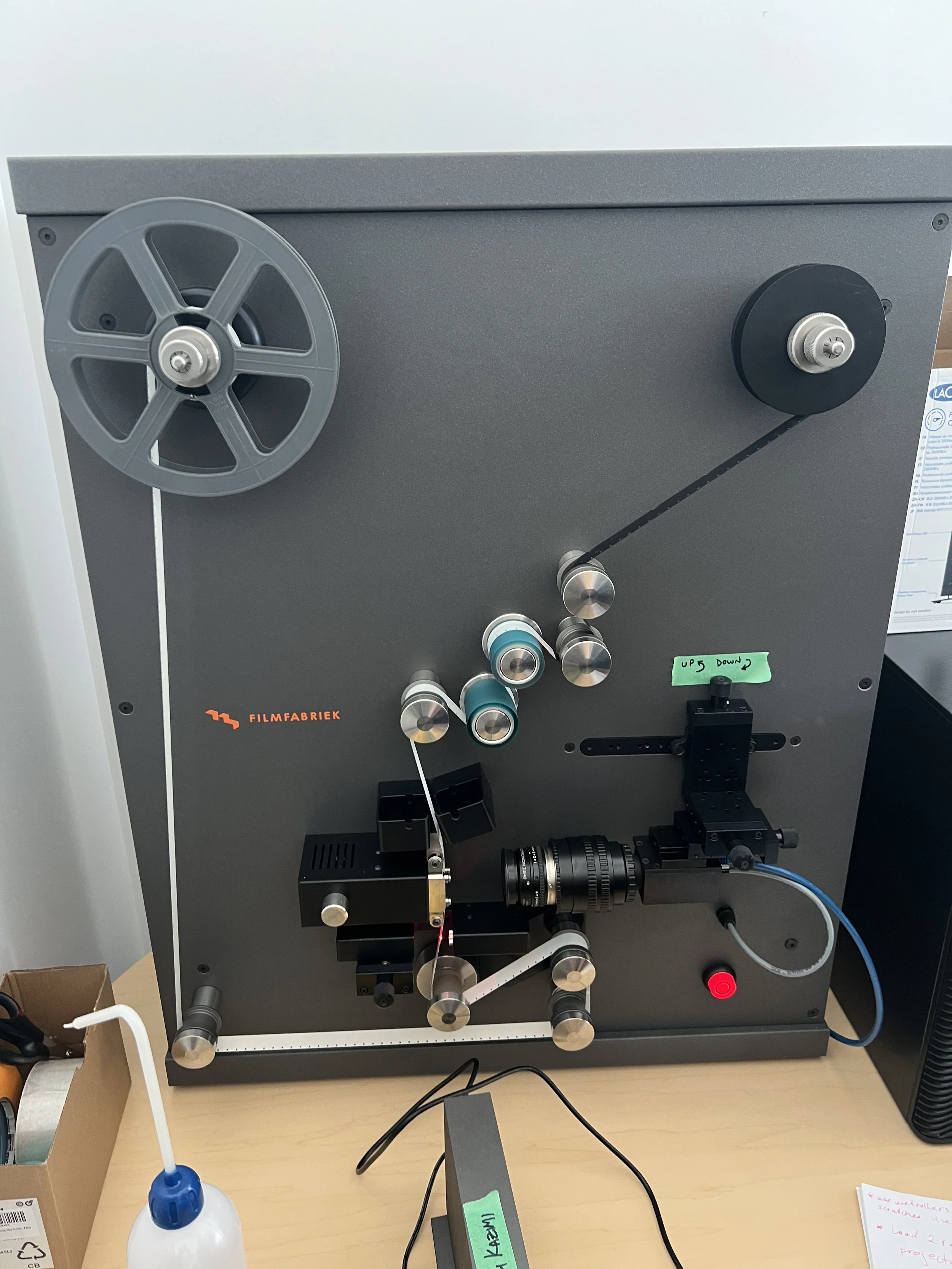

Once the reconstructed 3D environment was rendered digitally, I used a 16mm Bolex camera to shoot footage of the digital visuals. I then hand-processed the 16mm film footage in a darkroom. During this manual processing, scratches and other forms of contamination were introduced to the film. I digitized the 16mm film using a scanner and identified the damaged segments in the digital files. I used Photoshop AI tools to edit the ruined footage and exported the results to Runway AI for animation. Runway AI was also used to stitch the various clips together, creating smooth transitions between them.
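The description above does not say how the damaged segments were identified, and they may well have been picked out by eye. As one possible illustration only, the minimal Python sketch below flags frames in which many pixels differ sharply from the same pixels in the neighboring frames, then groups consecutive flagged frames into segments. It assumes each scanned frame is already available as a grid of grayscale values from 0 to 255; the function names `damage_score` and `damaged_segments` and the thresholds `tol` and `min_fraction` are hypothetical and not part of the original workflow.

```python
from statistics import median


def damage_score(prev, cur, nxt, tol=40):
    """Fraction of pixels in `cur` that differ from the temporal median
    of (prev, cur, nxt) by more than `tol` gray levels.

    Each frame is a list of rows; each row is a list of ints (0-255).
    """
    total = 0
    damaged = 0
    for row_p, row_c, row_n in zip(prev, cur, nxt):
        for p, c, n in zip(row_p, row_c, row_n):
            total += 1
            if abs(c - median((p, c, n))) > tol:
                damaged += 1
    return damaged / total if total else 0.0


def damaged_segments(frames, tol=40, min_fraction=0.02):
    """Return inclusive (start, end) index pairs of consecutive frames
    whose damage score is at least `min_fraction`.

    The first and last frames are skipped because they lack a neighbor
    on one side.
    """
    flagged = []
    for i in range(1, len(frames) - 1):
        score = damage_score(frames[i - 1], frames[i], frames[i + 1], tol)
        if score >= min_fraction:
            flagged.append(i)

    # Merge runs of adjacent frame indices into segments.
    segments = []
    for i in flagged:
        if segments and i == segments[-1][1] + 1:
            segments[-1] = (segments[-1][0], i)
        else:
            segments.append((i, i))
    return segments
```

A comparison against neighboring frames only catches marks that change from frame to frame, such as chemical spots or short scratches. A scratch that runs through the same position across many frames would pass unnoticed and would need a different approach.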

Finally, I edited the audio for the video using NASA’s publicly available Milky Way sonification data. This entire process weaves together digital and analog technologies to reimagine the disappearing building on East Beijing Road in Shanghai.

Process of capturing the reconstructed 3D environment and hand-processing the 16mm film